Confluent Cloud is a fully managed data streaming service that lets us access, store, and manage data as continuous, real-time streams. It provides ksqlDB, a streaming SQL engine for Kafka that offers an easy-to-use yet powerful interactive SQL interface for stream processing. This article shows how to automate Confluent ksqlDB deployments using ksql-migrations.

Pre-requisites:

1. A Confluent Cloud account with a Kafka cluster and a ksqlDB cluster.

2. Confluent Platform installed locally using Docker.

3. Confluent CLI installed locally.

4. A basic understanding of the Confluent platform and KSQL.

Set up the GitHub repository

First things first!

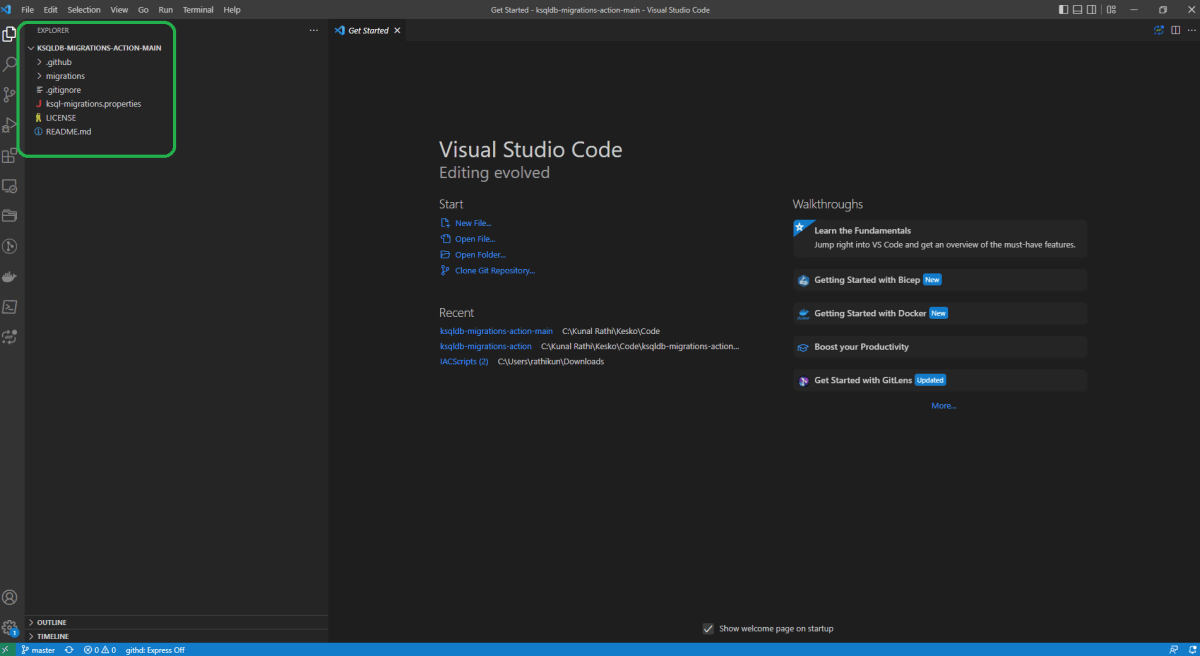

1. Fork the git repository jzaralim/ksqldb-migrations-action. This repository has most of what we need to implement CI/CD for ksqlDB.

2. Clone the forked repository locally using any Git client, such as VS Code.

Create a Confluent ksqlDB cluster API key

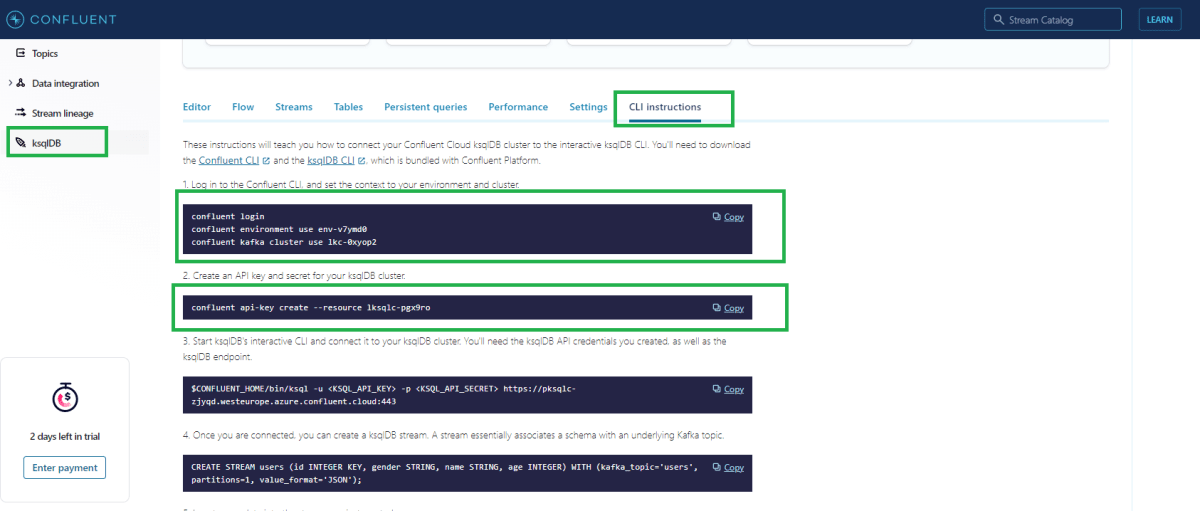

Log in to confluent.io and navigate to your Kafka cluster and ksqlDB cluster. Click CLI instructions, as shown in the image. These instructions are specific to your selected Kafka and ksqlDB clusters, so you can copy and paste them into the Confluent CLI.

Follow the steps below to get the API key, API secret, and endpoint URL for the ksqlDB cluster.

1. Log in to the Confluent CLI.

confluent login

2. Set the context to your environment and cluster.

confluent environment use env-v7ymd0

confluent kafka cluster use lkc-0xyop2

3. Create an API key and secret for your ksqlDB cluster.

confluent api-key create --resource lksqld-pgx9ro

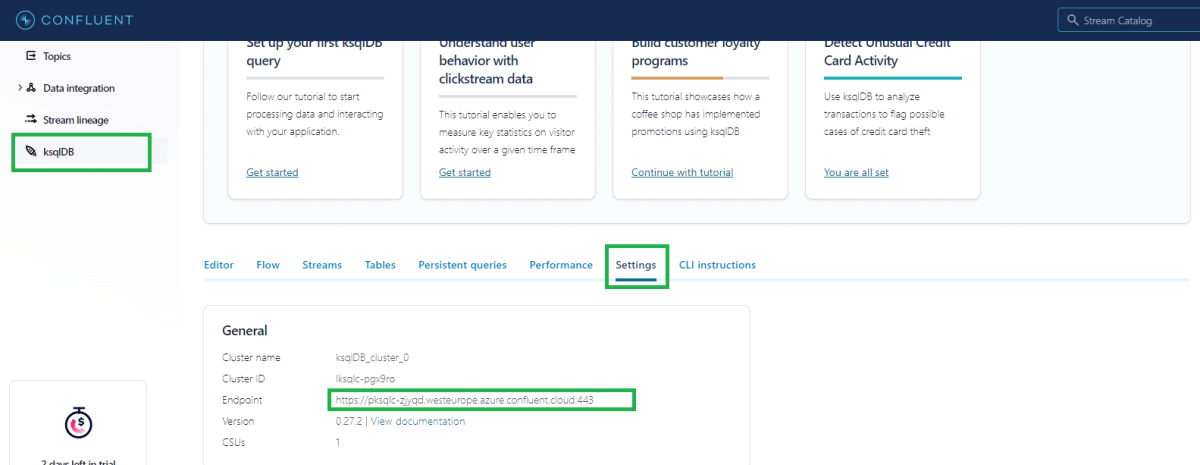

4. Get the ksqlDB cluster endpoint URL.

Save this information somewhere, as we will use it in the steps below.
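Before moving on, it can help to confirm that the endpoint and the API key pair actually work together. The sketch below is one possible check, not part of the original setup: the function name check_ksqldb_endpoint is hypothetical, and it assumes the three values are exported under the same names used later for the GitHub secrets. It calls the ksqlDB REST /info resource with basic authentication. In recent Confluent CLI versions, the endpoint should also be listed in the output of confluent ksql cluster list.

```shell
# Hypothetical helper: check that the ksqlDB endpoint and API key work together.
# Assumes CONFLUENT_KSQLDB_ENDPOINT, CONFLUENT_KSQLDB_API_KEY and
# CONFLUENT_KSQLDB_API_SECRET are set in the current shell.
check_ksqldb_endpoint() {
  # /info is the ksqlDB REST resource that reports server version and status.
  # --fail makes curl return a non-zero exit code on HTTP errors such as 401.
  # "${VAR%/}" drops a trailing slash so the URL never contains "//info".
  curl --silent --show-error --fail \
    --user "${CONFLUENT_KSQLDB_API_KEY}:${CONFLUENT_KSQLDB_API_SECRET}" \
    "${CONFLUENT_KSQLDB_ENDPOINT%/}/info"
}
```

After exporting the three variables, running check_ksqldb_endpoint should print a small JSON document describing the server; an authentication error usually means the key was created for a different resource.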

Initialize ksql-migrations metadata

The ksql-migrations tool uses the MIGRATION_EVENTS stream and the MIGRATION_SCHEMA_VERSIONS table, along with their backing topics, to keep a log of the deployments made through it. To create them in the Confluent cluster, follow the steps below.

1. Connect to the local ksqldb-server Docker container using bash. If you are using Bash on Windows, you can run the command below.

docker-compose exec ksqldb-server bash

The ksql-migrations tool is available with all ksqlDB versions starting from ksqlDB 0.17 or Confluent Platform 6.2.

2. Run the command below in the local ksqldb-server container.

ksql-migrations new-project orders https://pksqlc-zjyqd.westeurope.azure.confluent.cloud:443

Here, orders is the project’s name, and https://pksqlc-zjyqd.westeurope.azure.confluent.cloud:443 is the ksqlDB cluster endpoint.

The above command creates an orders directory under the home directory in the ksqldb-server container. It contains the ksql-migrations.properties file and an empty migrations directory, as shown in the image below.

3. Edit the ksql-migrations.properties file with the following details. The placeholder values match the variable names used in the GitHub workflow file.

ksql.server.url=${CONFLUENT_KSQLDB_ENDPOINT}

ksql.migrations.topic.replicas=3

ssl.alpn=true

ksql.auth.basic.username=${CONFLUENT_KSQLDB_API_KEY}

ksql.auth.basic.password=${CONFLUENT_KSQLDB_API_SECRET}

ksql.migrations.dir.override=${CONFLUENT_MIGRATION_DIR}

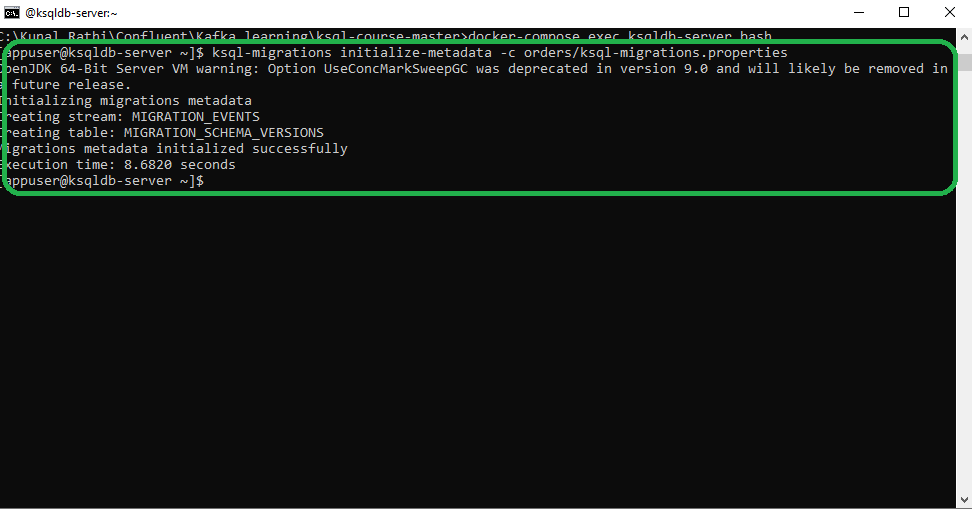

4. Now, run the command below to initialize the migration metadata in Confluent Cloud, based on the configuration set in the steps above.

ksql-migrations initialize-metadata -c orders/ksql-migrations.properties

You should now be able to see the corresponding migration topics in Confluent Cloud.
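One detail worth spelling out for the local run in step 4: the article does not say how the ${...} placeholders in ksql-migrations.properties get resolved. Assuming they are plain text that has to be replaced before the tool reads the file, a sketch like the following could render a local copy from environment variables. The function name render_properties and the output file name are hypothetical.

```shell
# Hypothetical helper: copy a properties template and replace each ${NAME}
# placeholder with the value of the environment variable of the same name.
# Assumption: the values contain no '|', '&' or '\' characters, since those
# are special in the sed replacement used here. Unset variables become empty.
render_properties() {
  template=$1
  output=$2
  sed \
    -e 's|\${CONFLUENT_KSQLDB_ENDPOINT}|'"${CONFLUENT_KSQLDB_ENDPOINT}"'|g' \
    -e 's|\${CONFLUENT_KSQLDB_API_KEY}|'"${CONFLUENT_KSQLDB_API_KEY}"'|g' \
    -e 's|\${CONFLUENT_KSQLDB_API_SECRET}|'"${CONFLUENT_KSQLDB_API_SECRET}"'|g' \
    -e 's|\${CONFLUENT_MIGRATION_DIR}|'"${CONFLUENT_MIGRATION_DIR}"'|g' \
    "$template" > "$output"
}
```

For example, render_properties orders/ksql-migrations.properties orders/local.properties writes a resolved copy that can be passed to the tool with -c. The rendered file contains the API secret in clear text, so it should stay out of version control and be deleted after use.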

Note: The GitHub collaboration branch name and the workflow trigger branch name should be the same.

Set up GitHub secrets for GitHub Actions

Create GitHub secrets for the ksqlDB cluster endpoint, API key, and API secret, respectively named:

CONFLUENT_KSQLDB_ENDPOINT

CONFLUENT_KSQLDB_API_KEY

CONFLUENT_KSQLDB_API_SECRET

You retrieved these values in the steps above.
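The secrets can be created in the repository settings page, or from a terminal. As a sketch of the terminal route, assuming the GitHub CLI (gh) is installed and authenticated, the hypothetical function below reads the three values from environment variables of the same names and stores them as repository secrets. The repository argument is a placeholder for your own fork.

```shell
# Hypothetical helper: store the three ksqlDB values as GitHub Actions secrets.
# Assumes the GitHub CLI (gh) is installed and logged in, and that each value
# is available in an environment variable named like the secret.
set_ksqldb_secrets() {
  repo=$1   # your fork, in owner/name form
  names="CONFLUENT_KSQLDB_ENDPOINT CONFLUENT_KSQLDB_API_KEY CONFLUENT_KSQLDB_API_SECRET"

  # Check everything first so we never end up with only some secrets set.
  for name in $names; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Environment variable $name is not set" >&2
      return 1
    fi
  done

  for name in $names; do
    eval "value=\${$name}"
    # The value is piped on stdin so it does not show up in the process list.
    printf '%s' "$value" | gh secret set "$name" --repo "$repo" || return 1
  done
}
```

A call would look like set_ksqldb_secrets your-user/ksqldb-migrations-action, where your-user stands for the account that owns the fork.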

We are all set to automate Confluent ksqlDB deployments

It’s time to verify our setup. Start committing changes to the migrations folder in the GitHub repository. For this demo, we will commit the three migration files included in the ksqldb-migrations-action GitHub repository.

- After the initial commit, the migrate-cloud-ksqldb workflow will be triggered. It will deploy the initial three ksql migration versions to the Confluent Cloud cluster.

- After the migration, the ORDER_EVENTS stream will look like the one in the image below.
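The article does not reproduce the workflow file, so the exact commands it runs are not shown here. As a rough local equivalent, the sketch below previews the pending migrations with a dry run, applies them, and then prints the migration status using the tool's apply and info commands. The function name run_migrations and the KSQL_MIGRATIONS_BIN override are hypothetical conveniences, and the properties file is assumed to hold resolved values.

```shell
# Hypothetical wrapper around the ksql-migrations CLI.
# KSQL_MIGRATIONS_BIN is an assumed override for the binary name or path.
run_migrations() {
  config=$1
  bin=${KSQL_MIGRATIONS_BIN:-ksql-migrations}

  # Preview what would be applied without touching the cluster.
  "$bin" --config-file "$config" apply --all --dry-run || return 1

  # Apply every pending migration in version order.
  "$bin" --config-file "$config" apply --all || return 1

  # Print the version, name and state of each migration.
  "$bin" --config-file "$config" info
}
```

Running run_migrations orders/ksql-migrations.properties from inside the ksqldb-server container is one way to reproduce a deployment by hand when a workflow run needs debugging.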

Let’s say we need to add a DESCRIPTION column to the ORDER_EVENTS stream.

- Add a new version file V000004__add_column_to_order_events.sql.

- Commit the new version file, which triggers a GitHub Actions run, as shown in the image below.
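The contents of the new version file are not listed in the article. A minimal sketch of what it might contain is shown below, wrapped in a hypothetical shell function that writes the file. It assumes ORDER_EVENTS was created with a plain CREATE STREAM statement, which is what ALTER STREAM expects, and it picks VARCHAR as the column type purely for illustration.

```shell
# Hypothetical helper: write the fourth migration file into the given directory.
# Assumptions: ORDER_EVENTS is a source stream (created with CREATE STREAM, not
# CREATE STREAM AS SELECT), and DESCRIPTION is a VARCHAR column.
add_description_migration() {
  migrations_dir=$1
  mkdir -p "$migrations_dir" || return 1
  cat > "$migrations_dir/V000004__add_column_to_order_events.sql" <<'EOF'
ALTER STREAM ORDER_EVENTS ADD COLUMN DESCRIPTION VARCHAR;
EOF
}
```

Calling add_description_migration migrations from the repository root creates the file. Committing and pushing it to the trigger branch then starts the workflow.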

Pro tips:

1. The steps in this article need to be done only once, by a DevOps person. Individual developers only need to commit changes to the migrations folder.

2. The format of a migration version file name is Vxxxxxx__name_of_the_file.sql: ‘V’ followed by an incrementing six-digit number with leading zeros, two underscores, and a description. E.g., V000012__new_streams.sql.

3. It is advisable to add the IF NOT EXISTS clause to CREATE STREAM/TABLE ksql statements and the IF EXISTS clause to DROP STREAM/TABLE ksql statements. This avoids version-file failures when an object already exists, or has already been dropped, in the ksqlDB cluster.

4. Learn how to filter kstream by rowtime using ksql.
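The naming rule from tip 2 is easy to get wrong by hand, so a small check can be useful before committing. The two functions below are a sketch with hypothetical names: the first tests a file name against the Vxxxxxx__name.sql pattern, and the second proposes the next file name by scanning a migrations directory. The lowercase .sql extension is assumed to be required.

```shell
# Hypothetical helper: succeed only if the name looks like V000012__some_name.sql.
is_valid_migration_name() {
  case $1 in
    V[0-9][0-9][0-9][0-9][0-9][0-9]__?*.sql) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print the next version file name for a description.
next_migration_name() {
  dir=$1
  description=$2
  last=0
  for f in "$dir"/V[0-9][0-9][0-9][0-9][0-9][0-9]__*.sql; do
    [ -e "$f" ] || continue          # the glob matched nothing
    base=${f##*/}                    # strip the directory part
    num=${base#V}                    # drop the leading V
    num=${num%%__*}                  # keep only the six digits
    # Strip leading zeros so the shell does not treat the number as octal.
    num=$(printf '%s' "$num" | sed 's/^0*//')
    num=${num:-0}
    if [ "$num" -gt "$last" ]; then
      last=$num
    fi
  done
  printf 'V%06d__%s.sql\n' "$((last + 1))" "$description"
}
```

For instance, next_migration_name migrations add_column_to_order_events prints the file name to use for the next change. The ksql-migrations tool also has a create command that generates the next version file, which may be preferable when the tool is available locally.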

Kunal Rathi
